Tailoring is fancy, sufficiently fancy that you may go your entire life and never once experience the art. Having garments custom-made to suit your body shape is expensive, even if there is a legion of benefits in doing so. Mass-produced clothes, meanwhile, are never going to do the job if you’ve got a body that diverges from what’s expected or treated as “normal.”

There are two real problems, measurement and manufacturing, and the fashion industry is wrestling with both right now. A Taiwanese company, TG3D, has at least discovered a way to solve the first part of the equation with little more than an iPhone. It has developed a method of using FaceID to scan the geography of your body to give you a suite of measurements in minutes.

I first encountered TG3D back in 2018, when the company was showing off its wares at Computex in Taipei. The system then required you to step into a booth the size of a changing room, which housed pillars full of infrared cameras. When activated, the system would scan your body and help you determine the ideal sizes for trying on clothes.

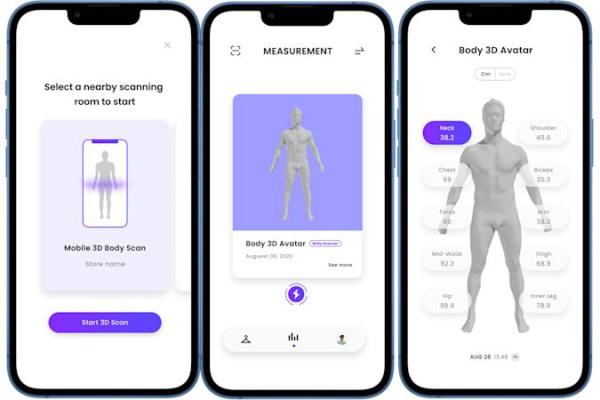

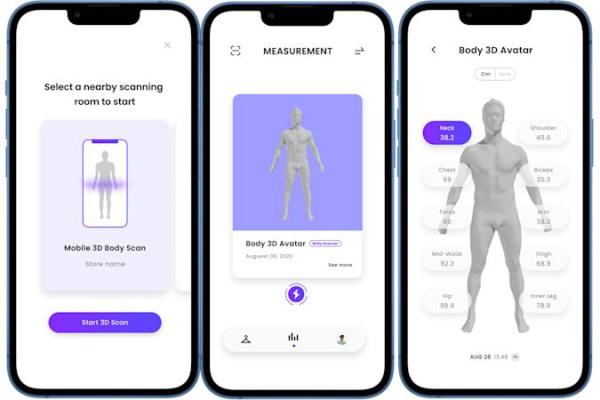

Since then, the company has been working to shrink this technology down to something that requires a lot less investment. Any FaceID-equipped iPhone can now offer a similar, albeit less accurate, scanning solution, enabling users to test sizes for off-the-peg clothes. Co-founder Rick Yu explained that the project was designed for ready-to-wear fashion, to “solve [the issue of] huge returns.”

Returns are, after all, a key problem for e-commerce fashion brands, since buyers can’t be sure that their preferred size will actually fit them. “A lot of consumers buy three different sizes and return the other two,” said Yu, a habit that is bad for both the planet and most retailers’ bottom lines. If you know ahead of time what you need to order, the wastage and expense should decrease.

I tested the system and found that, much like it did in 2018, it reminded me how much timber I need to drop from my waistline. All you need to do, however, is stand your phone up on a flat surface – and it does need to be perfectly flat, so grab a book or some sticky tack. Then, just stand in view of the camera, ideally just in your underwear and, when ready, start turning around on the spot with your arms away from your sides. All in all, the scanning process takes less than a minute, and the analysis only takes a further two or three.

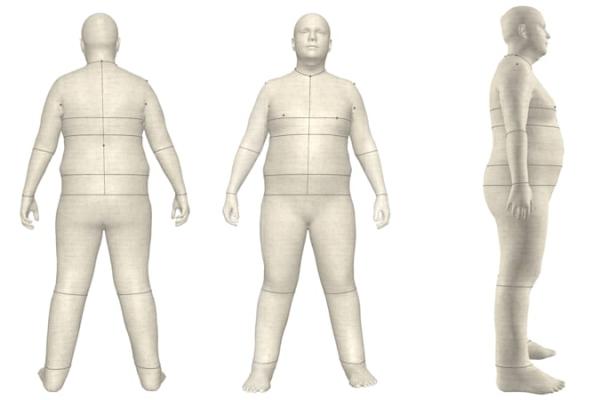

Once you’ve previewed your avatar to check it is more or less the right shape, you can send it off to the cloud to be properly analyzed. You may notice that, in use, your face, hands and feet are replaced with something blank and generic. This, says Yu, was an intentional move to protect user privacy given that you’ll be partially clothed during the scanning process.

The data produced by FaceID isn’t, by itself, accurate enough to produce a fully-measured avatar, however. Once captured, it’s sent to TG3D’s server to be analyzed. “We have an AI engine that identifies the landmarks,” explained Yu. “We identify the landmarks, we position the landmarks and then based on [that], we extract up to 250 measurements automatically,” he said.
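To give a rough sense of what extracting a measurement from a scan can involve, here is a minimal sketch in Python. It is not TG3D’s method, which the company did not detail. It simply assumes a scan arrives as a list of (x, y, z) points in meters with y pointing up, and that some earlier step (the landmark-finding Yu describes) has already supplied the height of, say, the waist. The function name `girth_at_height` and the `tolerance` parameter are invented for illustration.

```python
import math


def girth_at_height(vertices, height, tolerance=0.01):
    """Approximate a body circumference from a thin horizontal slice of points.

    Assumptions (not from TG3D): vertices are (x, y, z) tuples in meters,
    y is the vertical axis, and the slice forms one roughly convex loop.
    """
    # Keep only the points within `tolerance` of the requested height,
    # flattened onto the horizontal (x, z) plane.
    ring = [(x, z) for x, y, z in vertices if abs(y - height) <= tolerance]
    if len(ring) < 3:
        raise ValueError("not enough points in the slice to form a loop")

    # Order the points by their angle around the slice's centroid.
    cx = sum(p[0] for p in ring) / len(ring)
    cz = sum(p[1] for p in ring) / len(ring)
    ring.sort(key=lambda p: math.atan2(p[1] - cz, p[0] - cx))

    # Sum the distances between neighboring points, closing the loop
    # by wrapping from the last point back to the first.
    return sum(
        math.dist(ring[i], ring[(i + 1) % len(ring)])
        for i in range(len(ring))
    )
```

Calling `girth_at_height(points, 1.05)` on a scan would return an estimated circumference at 1.05 m off the floor. A real system would also need to cope with arms, noise and non-convex cross-sections, which this sketch ignores.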

Much of this data, and the conclusions generated from it, have been curated through years of interviews with tailors and pattern makers. “These [are] measurements that make sense to them,” said Yu, when I asked for an explanation of some of the more arcane terminology. Yu explained that the margin of error using the iPhone system is, at most, 1.3 inches.

Yu also explained that the data can be exported in a variety of formats, so as well as being used for tailoring, it has other uses. For instance, an avatar could be exported as an .OBJ file, which can be used for 3D modeling and sculpting. And, naturally, it’s also possible to capture this data and create an avatar for any potential metaverse that could require it.
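For the curious, .OBJ is a plain-text format in which each line beginning with `v` lists one vertex’s x, y and z coordinates, so an exported avatar is easy to inspect. The sketch below is an illustration only: it assumes the file uses y as the vertical axis and meters as its unit, neither of which TG3D confirmed, and the helper names `read_obj_vertices` and `height_of` are made up for this example.

```python
def read_obj_vertices(text):
    """Collect the vertex positions from the text of an .OBJ file."""
    vertices = []
    for line in text.splitlines():
        parts = line.split()
        # Geometric vertices look like "v x y z"; other records such as
        # "vn" (normals), "vt" (texture coordinates) and "f" (faces) are skipped.
        if len(parts) >= 4 and parts[0] == "v":
            vertices.append(tuple(float(value) for value in parts[1:4]))
    return vertices


def height_of(vertices):
    """Return the vertical extent of the model, assuming y is the up axis."""
    ys = [vertex[1] for vertex in vertices]
    return max(ys) - min(ys)
```

Reading a file with `open(path).read()` and passing the text to `read_obj_vertices` would give a list of points that 3D tools, or a few lines of your own code, can work with.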

TG3D’s solution isn’t the only thing on the market, and plenty of other companies are operating in this space looking for a magic bullet. Shopify, for instance, was recently granted a patent for a body-measuring concept to help folks choose garments. Amazon’s Echo Look had a fairly rudimentary system to judge a fit based on how well it flattered your body. MTailor offers a scanning service by analyzing a video clip its users upload for similar results.

All of this is going to be vital in helping to reduce fashion’s already serious waste problem. The industry reportedly produces 10 percent of the world’s total greenhouse gas emissions and consumes 20 percent of its water. That’s down to everything from sourcing and manufacturing through to the waste involved in the buying and returns process once garments reach our homes.

And the consequences of this overproduction and overconsumption are piling up. One fairly pernicious example is blighting the Atacama desert in Chile. Garments made in South Asia will first be sold in Europe and the US, before the unsold stock is sent to South America for resale. Anything that remains unsold is dumped in huge piles, left to rot in the daylight with the price tags still on. This isn’t the only example, however, and there are toxic heaps of discarded clothing growing in Ghana right now.

Measurements are only half the problem, and manufacturing remains a huge issue for the industry today. Attempts by companies to automate this process have not been successful – Adidas’ Speedfactory concept, for instance, was abandoned back in 2019.

Yu, whose technology has most prominently been used by H&M in its flagship Stockholm store to create custom jeans with partner Unspun, also waxed lyrical about the future of fashion. He showed me the concept of an online retailer that was entirely virtual. A user can plug their body scan into the outfits on screen and get instant previews of how they would look in them. Crucially, this could happen before the garment is even made, ensuring that only what’s good gets produced.

For now, I can be confident that my iPhone, at least, knows my inside leg measurement. The next step is for every fashion brand to work out how to stop my thighs rubbing the seat of my jeans to dust.